How AI Is Transforming Data Center Power and Creating a $4 Billion Opportunity for Energy Storage (full report)

AI's Power Swings Are Driving Battery Adoption Beyond Traditional UPS

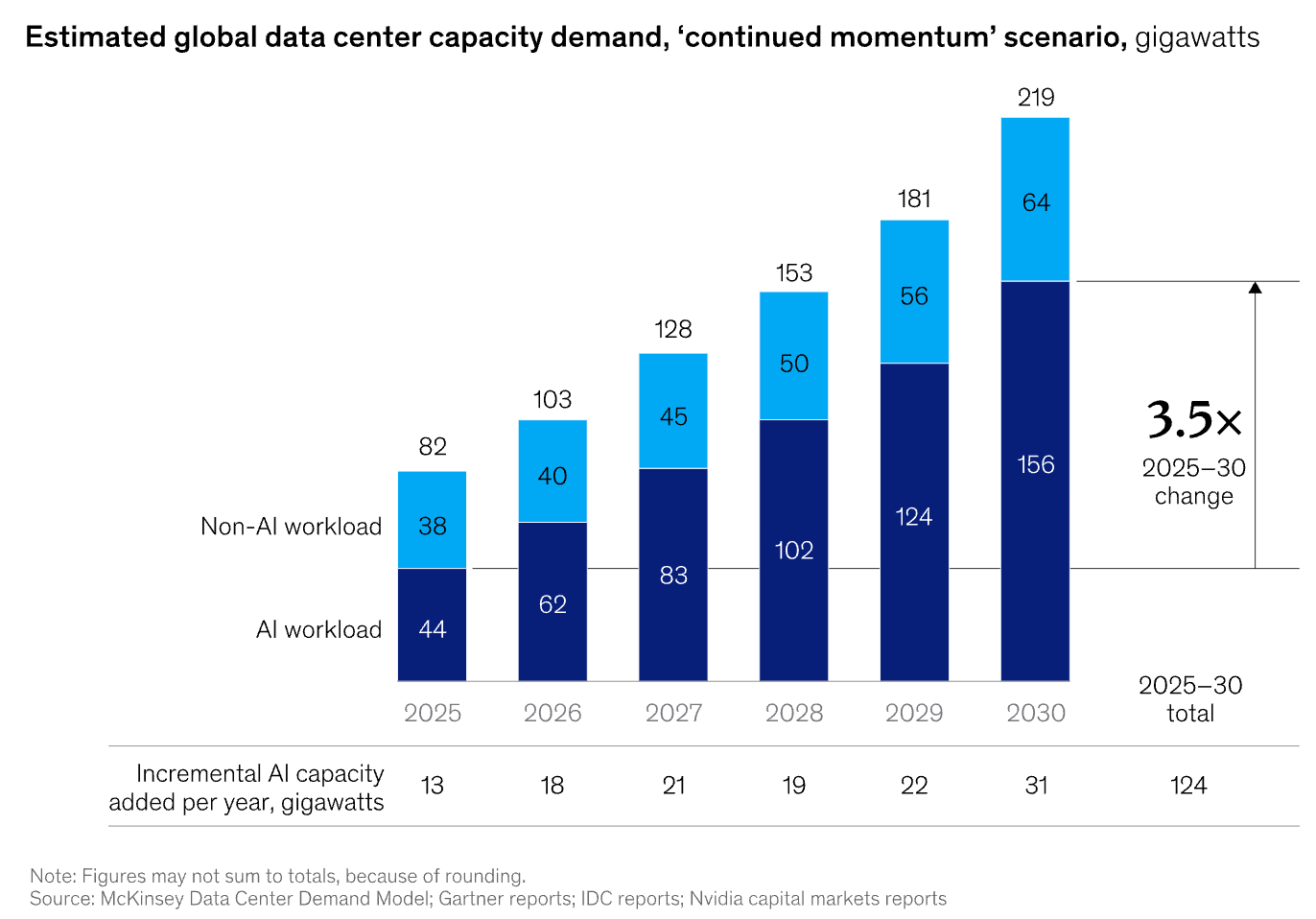

The United States is experiencing the largest electricity demand transformation since World War II, with data centers poised to consume 6.7-12% of total US electricity generation by 2028. Data center developers are rushing to bring online new facilities—the majority purpose built for AI—which is creating a massive demand for power1.

But here's what most investors miss: AI workloads don't just use more power, they use power completely differently than traditional data centers. That difference is creating both a $6.7 trillion total infrastructure opportunity and a more specific, roughly $4 billion battery storage opportunity growing at 7.7% annually2: the data center energy storage market alone is projected to reach $4.3 billion by 2034, driven by AI's unique power characteristics and the need for grid-interactive assets3.
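The growth rate and the 2034 projection can be tied together with the standard compound-growth formula. The sketch below backs out the market size they imply today. It assumes a 2024 base year (10 years of compounding), because the text does not say which year the 7.7% rate starts from.

```python
def project(start_value: float, annual_rate: float, years: int) -> float:
    """Grow a value at a constant compound annual growth rate (CAGR)."""
    return start_value * (1.0 + annual_rate) ** years


def implied_start_value(end_value: float, annual_rate: float, years: int) -> float:
    """Invert project(): the starting value that reaches end_value at this CAGR."""
    return end_value / (1.0 + annual_rate) ** years


# Figures from the text: $4.3B by 2034, growing 7.7% per year.
# ASSUMPTION: 2024 base year, i.e. 10 years of compounding.
base = implied_start_value(4.3, 0.077, 10)
print(f"Implied 2024 market size: ${base:.2f}B")
print(f"Check, grown back to 2034: ${project(base, 0.077, 10):.2f}B")
```

Under that assumption the implied starting market is about $2.05 billion, and the market roughly doubles over the decade (1.077^10 is about 2.1).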

AI workloads present unique power characteristics that distinguish them from traditional data center operations: highly variable demand patterns with load swings of 40% or more, concentrated power densities exceeding 150kW per rack, and computational bursts that can stress both grid connections and backup power systems. These dynamics are driving unprecedented adoption of battery energy storage systems (BESS) across the data center industry, transforming batteries from simple uninterruptible power supplies (UPS) into sophisticated grid-interactive assets.

The AI Power Problem No One Talks About

AI workloads create unprecedented power variability

Traditional data center workloads maintain relatively stable power consumption patterns, with variations typically limited to 10-15% of total capacity. Enterprise applications, cloud storage, and content delivery networks operate with predictable demand curves that legacy power infrastructure was designed to accommodate. AI training and inference workloads fundamentally disrupt these assumptions—and that's creating massive opportunities for anyone paying attention.

Machine learning model training can consume 40-60% more power during computation-intensive phases compared to idle periods, with transitions occurring within minutes or even seconds. NVIDIA's latest AI chips consume up to 300% more power than their predecessors, and industry forecasts suggest that global data center energy demand will double in the next five years5. These rapid load fluctuations create challenges for both grid-tied operations and traditional backup power systems—but they also create the perfect use case for advanced battery storage.

Graphics Processing Units (GPUs) designed for AI workloads exhibit fundamentally different power characteristics than Central Processing Units (CPUs). While CPU power consumption scales relatively linearly with utilization, GPU workloads can spike from 25% to 95% capacity instantaneously when neural network training begins. A single AI training cluster containing 25,000 H100 GPUs can shift power demand by 50-75 MW within minutes—equivalent to suddenly plugging in 50,000 homes. Try explaining that load profile to your utility provider!
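A quick way to sanity-check the "homes" comparison is to divide the power swing by an average household's mean draw. The sketch assumes about 10,500 kWh per year for a US home (roughly 1.2 kW on average) and uses the H100 SXM's 700 W board power to compute a GPU-only floor; both are illustrative inputs, not figures from the report.

```python
HOURS_PER_YEAR = 8760


def gpu_board_power_mw(num_gpus: int, tdp_watts: float) -> float:
    """Aggregate GPU board power in MW (excludes servers, networking, cooling)."""
    return num_gpus * tdp_watts / 1e6


def home_equivalents(swing_mw: float, home_kwh_per_year: float = 10_500) -> int:
    """Number of average homes whose mean draw equals swing_mw.

    ASSUMPTION: ~10,500 kWh/year per home, i.e. ~1.2 kW average draw.
    """
    avg_home_kw = home_kwh_per_year / HOURS_PER_YEAR
    return round(swing_mw * 1000 / avg_home_kw)


print(f"GPU boards alone: {gpu_board_power_mw(25_000, 700):.1f} MW")
for mw in (50, 60, 75):
    print(f"{mw} MW swing ~ {home_equivalents(mw):,} homes")
```

With these inputs a 60 MW swing, in the middle of the 50-75 MW range, works out to about 50,000 homes, consistent with the comparison in the text. The GPUs themselves account for 17.5 MW; the remainder of such a swing would come from host servers, networking, and cooling.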

Grid infrastructure wasn't designed for AI's power rollercoaster

Traditional grid connections assume steady baseload consumption with minimal variation. Utility interconnection agreements typically account for power factor corrections and modest demand fluctuations, but the 40% load swings characteristic of AI workloads exceed standard grid stability parameters. When hundreds of megawatts shift suddenly, local distribution systems experience voltage fluctuations, frequency deviations, and harmonic distortions that can affect other customers and make utility engineers very unhappy. These power quality problems affect not just the data center but also neighboring customers on the same network, potentially miles away.

Tesla's experience powering AI clusters demonstrates the scale of this challenge. The company's Dojo supercomputer training system requires dedicated 50 MW substations with specialized power conditioning equipment to manage the rapid load transitions without destabilizing local grid operations6. This represents a 10x increase in power infrastructure complexity compared to traditional data centers of equivalent capacity.

Here's the thing data center developers increasingly recognize: grid connections alone cannot provide the power quality and responsiveness required for AI workloads. Even facilities with robust grid connections are implementing battery systems to buffer demand fluctuations, protect sensitive equipment from grid disturbances, and provide headroom for computational bursts that exceed contracted capacity. This isn't just backup power anymore—it's becoming the primary power management strategy.
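The buffering role described here can be sketched as a simple ramp-rate limiter: grid draw follows the load but may change by at most a fixed number of MW per time step and may never exceed the contracted capacity, while the battery supplies or absorbs the difference within its power and energy limits. This is an illustrative control rule under stated assumptions (lossless battery, one-minute steps, hypothetical site limits), not any vendor's controller.

```python
def buffer_load(load_mw, dt_h, cap_mw, max_ramp_mw, energy_mwh, power_mw, soc_mwh):
    """Ramp-limit and cap grid draw; the battery covers the gap within its limits.

    Returns (grid_mw, battery_mw, final_soc_mwh); battery_mw > 0 means discharging.
    ASSUMPTION: lossless battery (round-trip efficiency ignored).
    """
    grid_mw, battery_mw = [], []
    prev_grid = min(load_mw[0], cap_mw)  # assume the site starts in steady state
    for load in load_mw:
        # Follow the load, but move no faster than max_ramp_mw per step...
        target = max(prev_grid - max_ramp_mw, min(load, prev_grid + max_ramp_mw))
        # ...and never above the contracted capacity.
        target = min(target, cap_mw)
        batt = load - target
        if batt > 0:  # discharge, limited by inverter power and stored energy
            batt = min(batt, power_mw, soc_mwh / dt_h)
        else:         # charge, limited by inverter power and remaining headroom
            batt = max(batt, -power_mw, -(energy_mwh - soc_mwh) / dt_h)
        soc_mwh -= batt * dt_h
        grid = load - batt  # the grid supplies whatever the battery does not
        grid_mw.append(grid)
        battery_mw.append(batt)
        prev_grid = grid
    return grid_mw, battery_mw, soc_mwh


# HYPOTHETICAL site: 60 MW baseline with a 40 MW, five-minute training burst.
load = [60, 100, 100, 100, 100, 100, 60, 60, 60]
grid, batt, soc = buffer_load(load, dt_h=1 / 60, cap_mw=90, max_ramp_mw=5,
                              energy_mwh=20, power_mw=40, soc_mwh=10)
print("grid MW:", grid)
print("battery MW:", batt)
print(f"final SOC: {soc:.2f} MWh")
```

In this run the utility sees a gentle 5 MW-per-minute ramp that peaks at 85 MW instead of a 40 MW jump, and the battery recharges as the grid draw ramps back down.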

Tesla's $10 Billion Energy Bet

Beyond Battery Backup: Six Revenue Streams

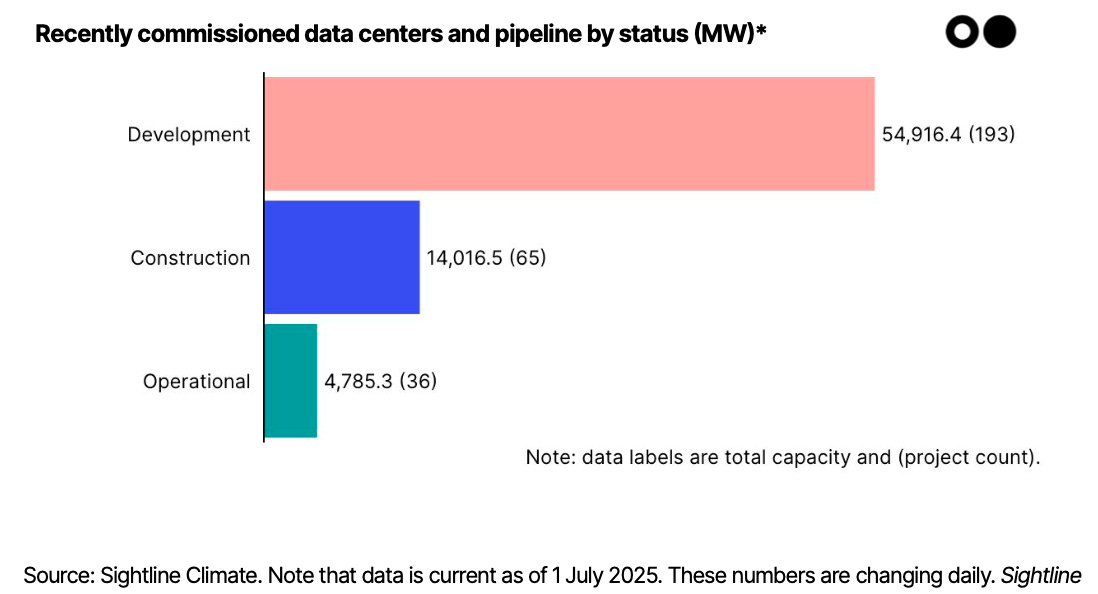

Traditional data center UPS systems provide 10-15 minutes of backup power to bridge brief outages or enable graceful shutdowns. These systems, typically based on valve-regulated lead-acid (VRLA) batteries, operate at modest power levels (1-5 MW) and focus solely on keeping operations running rather than on grid interaction or economic optimization.

Modern battery energy storage systems serve multiple functions simultaneously: demand response participation, frequency regulation services, energy arbitrage, capacity market participation, backup power value, and improved AI training efficiency. Tesla's Megapack systems, deployed across major data centers, can provide 2-4 hours of full facility backup while simultaneously offering frequency regulation services to grid operators7. Combined, these revenue streams can offset 40-60% of battery system costs over 10-year operational periods—making what used to be a cost center into a potential profit center.
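Backup energy scales linearly with both load and duration, which is why moving from a 10-15 minute UPS to the 2-4 hours quoted for Megapack installations changes system size so dramatically. The sketch uses a hypothetical 5 MW load (the top of the UPS range) for every case; the `usable_fraction` parameter is an assumption standing in for depth-of-discharge and reserve limits.

```python
def backup_energy_mwh(load_mw: float, minutes: float, usable_fraction: float = 1.0) -> float:
    """Nameplate energy needed to carry load_mw for `minutes`.

    usable_fraction < 1 models capacity that cannot be discharged
    (depth-of-discharge limits, reserves); it is an illustrative parameter.
    """
    return load_mw * (minutes / 60.0) / usable_fraction


load_mw = 5.0  # HYPOTHETICAL: top of the 1-5 MW UPS range
for label, minutes in [("UPS, 15 min", 15), ("BESS, 2 h", 120), ("BESS, 4 h", 240)]:
    print(f"{label:>12}: {backup_energy_mwh(load_mw, minutes):.2f} MWh")
```

At the same load, 2-4 hours needs 8-16 times the stored energy of a 15-minute UPS; derating with `usable_fraction=0.9` raises every figure by about 11%.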

The economic case for large-scale battery systems extends beyond reliability into serious money-making opportunities. Data centers equipped with substantial battery capacity can participate in wholesale electricity markets, earning revenue through ancillary services including frequency regulation, voltage support, and demand response. Ancillary services alone can offset 15-25% of battery system costs while improving overall facility power quality. Some forward-thinking developers are even designing their business models around these grid services revenues.
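The offset figures in this section measure different things: one covers all six revenue streams combined, the other covers ancillary services. A minimal, undiscounted revenue-stacking sketch makes the distinction concrete. Every dollar figure below is hypothetical, chosen only to land inside the quoted ranges, and the sketch ignores discounting, degradation, and the fact that one battery cannot always earn every stream at the same time.

```python
def cost_offset_fraction(annual_revenue_musd: dict, capex_musd: float,
                         years: int = 10, streams=None) -> float:
    """Undiscounted share of capex recovered by the chosen streams over `years`."""
    chosen = annual_revenue_musd if streams is None else streams
    total = sum(annual_revenue_musd[name] for name in chosen) * years
    return total / capex_musd


# HYPOTHETICAL annual revenues ($M/yr) for a battery system costing $10M.
revenue = {
    "frequency_regulation": 0.12,
    "demand_response": 0.06,
    "energy_arbitrage": 0.10,
    "capacity_market": 0.07,
    "backup_power_value": 0.10,
    "ai_training_efficiency": 0.03,
}
ancillary = ["frequency_regulation", "demand_response"]

print(f"All six streams: {cost_offset_fraction(revenue, 10.0):.0%}")
print(f"Ancillary only:  {cost_offset_fraction(revenue, 10.0, streams=ancillary):.0%}")
```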

Tesla dominates but the competition is heating up

Tesla maintains global leadership with 15% market share and 31.4 GWh deployments in 2024, expecting 50%+ growth in 2025 through Megapack 3 MWh units8. The company's $10.1 billion energy segment revenue with 26.2% gross margins validates the economic opportunity in data center power infrastructure. This isn't just about cars anymore—it's about powering the AI revolution.

Tesla's integration advantages extend beyond hardware to software optimization that competitors struggle to match. The company's Autobidder platform enables autonomous participation in electricity markets, optimizing battery charge/discharge cycles to maximize revenue while maintaining backup power capabilities. Data centers using Tesla systems report 20-30% reductions in electricity costs through intelligent grid arbitrage and demand charge management9. When you can cut your electricity bill by a quarter while improving reliability, the ROI math becomes pretty compelling.
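Autobidder's internals are not described here, so the sketch below swaps in a generic textbook heuristic rather than Tesla's method: given a day of hourly prices, find the cheapest charging window that precedes the most valuable discharging window for one full cycle. The prices, the 2-hour duration, the 10 MW power rating, and the 85% round-trip efficiency are all hypothetical.

```python
def best_single_cycle(prices, duration_h, power_mw, efficiency=0.85):
    """Best charge-then-discharge pair of non-overlapping windows for one cycle.

    Charging buys power_mw for duration_h hours; discharging sells that energy
    times the round-trip efficiency, spread evenly over duration_h hours.
    Returns (profit, charge_start_hour, discharge_start_hour).
    """
    n_windows = len(prices) - duration_h + 1
    window_sum = [sum(prices[i:i + duration_h]) for i in range(n_windows)]
    best = (float("-inf"), None, None)
    for c in range(n_windows):
        # Discharge may only start once the charge window has finished.
        for d in range(c + duration_h, n_windows):
            profit = power_mw * (efficiency * window_sum[d] - window_sum[c])
            if profit > best[0]:
                best = (profit, c, d)
    return best


# HYPOTHETICAL day-ahead prices, $/MWh, hours 0-23.
prices = [25, 22, 20, 20, 22, 28, 40, 55, 60, 50, 45, 40,
          38, 36, 40, 55, 80, 110, 120, 95, 70, 50, 35, 30]
profit, charge_hr, discharge_hr = best_single_cycle(prices, duration_h=2, power_mw=10)
print(f"Charge from hour {charge_hr}, discharge from hour {discharge_hr}: ${profit:,.0f}")
```

Production bidding engines co-optimize many cycles and ancillary commitments while holding back a state-of-charge floor for backup, none of which this one-cycle search models.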

Elon Musk's xAI directly benefits from Tesla's battery technology and provides a real-world case study, with xAI spending "approximately $191.0 million during 2024 and $36.8 million through February 2025" on purchases of Tesla's Megapack products for its Memphis data center operations10. This vertical integration demonstrates how hyperscale AI developers are leveraging advanced battery systems to manage the extreme power variability of training workloads—and why they're willing to pay premium prices for systems that actually work.

The competitive landscape is getting interesting

Fluence's partnership with Excelsior Energy Capital for 2.2 GWh deployments and Sungrow's PowerTitan 3.0 systems with 684 Ah cells demonstrate rapid technology advancement, but these vendors lack the software integration and market participation capabilities that Tesla provides11. The hardware is getting commoditized, but the software and grid integration capabilities are becoming the real differentiators. However, Powin Energy's bankruptcy, attributed to tariffs on Chinese imports, highlights supply chain vulnerabilities affecting the entire sector: a reminder that this market isn't without risks.

Chinese battery manufacturers including CATL and BYD are aggressively targeting US data center markets, offering lithium iron phosphate (LFP) systems at 20-30% lower cost than Tesla's systems. Compared with nickel-based chemistries, LFP batteries provide longer cycle life and enhanced safety but lower energy density, requiring larger installations for equivalent capacity. The cost advantage is real, but so is the space penalty.

European vendors including Northvolt and Saft focus on high-performance applications, providing battery systems optimized for the rapid cycling and high C-rates characteristic of AI workload management. These systems command premium pricing but offer superior performance for facilities prioritizing computational responsiveness over cost optimization. Different markets, different solutions—but all pointing toward massive growth in battery demand.

Power-First Development Revolution

Everything changed when power became the bottleneck

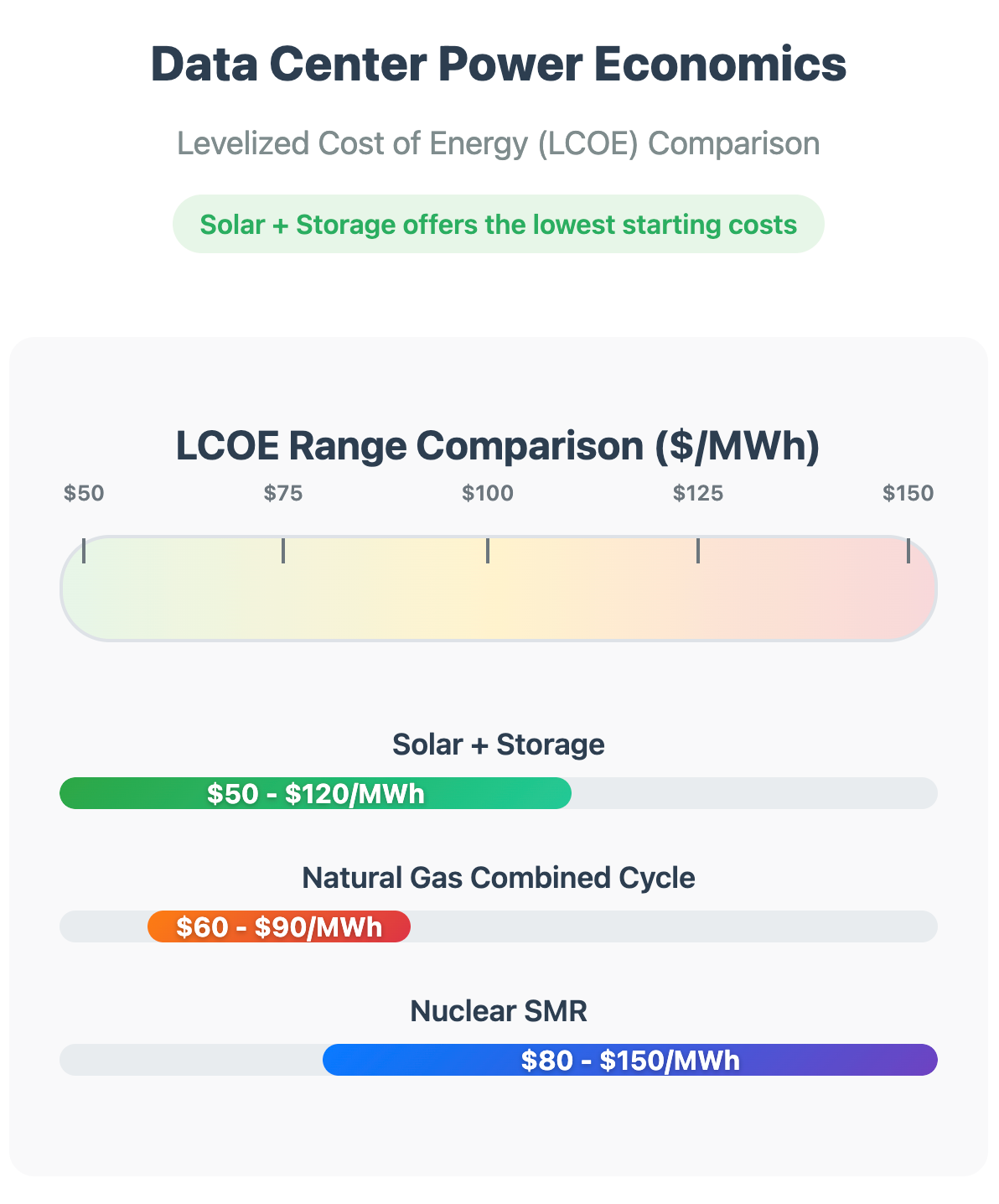

Power availability and grid access now dominate all other site selection considerations—and I mean completely dominate. Developers are offering generation capacity, grid services, and local infrastructure investment to secure utility approval. Median project investment has reached $800 million, averaging $5.5 million per MW for recent announcements, with AI-specific builds requiring 5-6x more capital than traditional enterprise facilities12. When power is your biggest constraint, suddenly every other factor becomes secondary.
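The two cost figures can be cross-checked by dividing one by the other. Treat the result as rough: it mixes a median project cost with an average cost per MW, which the text reports as separate statistics.

```python
def implied_capacity_mw(project_cost_musd: float, cost_per_mw_musd: float) -> float:
    """Capacity implied by a total project cost and a cost-per-MW rate."""
    return project_cost_musd / cost_per_mw_musd


# Figures from the text: $800M median project, $5.5M per MW.
print(f"~{implied_capacity_mw(800, 5.5):.0f} MW")
```

On that basis the median announced project corresponds to a facility on the order of 145 MW.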

Hyperscalers adopt different battery strategies based on their operational requirements and scale:

Big Tech (AWS, Google, Microsoft, Meta): Deploy utility-scale battery systems (50-200 MWh) for grid interaction and AI workload management. Microsoft leads with 21 announced projects, followed by Google (19), Amazon (18), and Meta (12)13. These companies justify massive battery investments through AI training efficiency gains and grid service revenues.

Colocation and GPU cloud providers (Equinix, Digital Realty, CoreWeave): Focus on smaller, distributed battery systems (5-25 MWh) that provide UPS functionality while enabling tenant-specific power quality optimization. CoreWeave's GPU-focused facilities employ high-power-density battery systems optimized for rapid response to AI workload fluctuations.

Enterprise and AI startups: Increasingly require battery-backed power as a prerequisite for AI workloads, with many refusing to locate in facilities lacking advanced energy storage capabilities.

Geographic clustering follows the smart money

Data center development increasingly concentrates in regions offering favorable battery storage policies and grid interconnection frameworks. Texas leads with its 23-day permitting timeline, ERCOT market structure enabling battery participation, and $5 billion state energy fund support14. The state's competitive electricity market allows data centers to monetize battery assets while Virginia's regulated utility structure limits revenue opportunities—a perfect example of how policy drives capital allocation.

California's energy storage mandate and Self-Generation Incentive Program (SGIP) provide up to $1.6 billion in incentives for battery deployments, making the state attractive despite restrictive gas generation policies15. Nevada's Clean Transition Tariff enables data centers to fund renewable generation paired with storage, creating integrated clean energy systems16. Smart developers are following the money—and the money is following the most battery-friendly policies.

AI Workload Management: The Clarke Energy Innovation

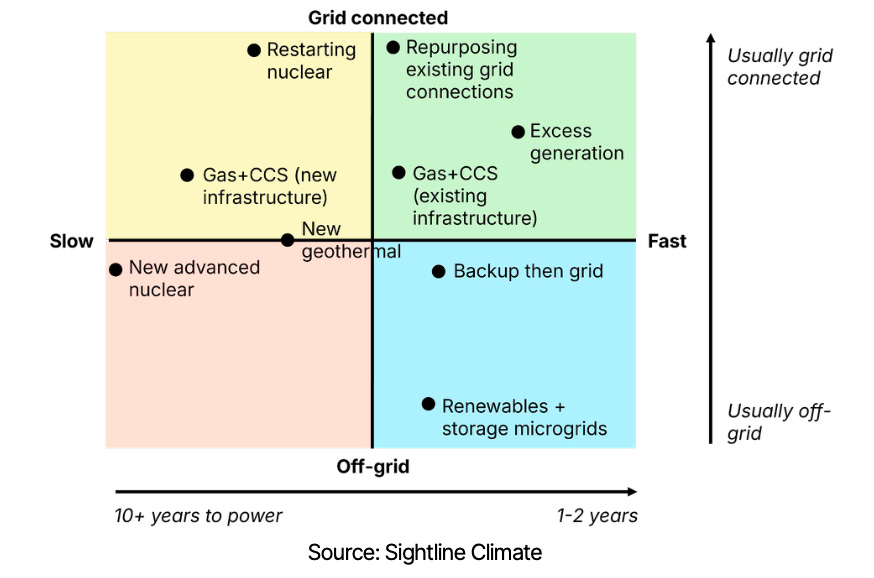

Variable load patterns drive hybrid power solutions

Clarke Energy has emerged as a pioneer in combined engine-battery systems specifically designed for AI workloads17. The company's hybrid solutions pair reciprocating engines with battery storage to address the unique challenges of variable AI power consumption while maintaining the rapid response capabilities essential for training operations.

Traditional reciprocating engines alone cannot respond quickly enough to AI load fluctuations. While engines can start within 3-5 minutes and reach full load within 10 minutes, AI workloads require power response within seconds. Battery systems bridge this gap, providing immediate power during computational bursts while engines ramp up to meet sustained demand.
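How much energy the battery must hold to bridge engine start-up can be worked out from the timings in the text. The sketch assumes a sudden step increase in load, no engine output until the engines have started, and a linear ramp to full output afterwards; the 20 MW step size is hypothetical.

```python
def bridge_energy_mwh(step_mw: float, start_delay_min: float, ramp_min: float) -> float:
    """Battery energy needed after a load step while engines start and ramp.

    ASSUMPTIONS: zero engine output for start_delay_min, then a linear ramp
    to full output over ramp_min.
    """
    start_phase = step_mw * start_delay_min / 60.0  # battery carries the whole step
    ramp_phase = 0.5 * step_mw * ramp_min / 60.0    # triangle: shrinking shortfall
    return start_phase + ramp_phase


# 20 MW step; full load reached 10 minutes after the start command.
print(f"5 min start + 5 min ramp: {bridge_energy_mwh(20, 5, 5):.2f} MWh")
print(f"3 min start + 7 min ramp: {bridge_energy_mwh(20, 3, 7):.2f} MWh")
```

Either way the energy involved is only a couple of MWh, but it must arrive within seconds of the step, which is the gap batteries fill.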

Clarke Energy's systems typically combine 10-50 MW of reciprocating engine capacity with 5-20 MWh of battery storage, creating hybrid microgrids capable of operating independently from the utility grid while managing the extreme power variability of AI training workloads. These systems achieve 99.999% uptime through redundant power paths and intelligent load management.
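Five nines translates into a concrete downtime budget, and redundant power paths are the usual way to approach it. The sketch computes both, assuming redundant paths fail independently of each other. That is a simplification, since shared fuel supplies, switchgear, or control software can fail together, and the 99.9% per-path figure is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of outage per year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(path_availabilities) -> float:
    """Availability when any one of several redundant paths suffices.

    ASSUMPTION: path failures are statistically independent.
    """
    unavailability = 1.0
    for a in path_availabilities:
        unavailability *= (1.0 - a)
    return 1.0 - unavailability


print(f"99.999% -> {downtime_minutes_per_year(0.99999):.2f} min/year")
print(f"Two 99.9% paths -> {parallel_availability([0.999, 0.999]):.4%}")
```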

Load balancing algorithms optimize AI performance

Advanced battery management systems use machine learning algorithms to predict AI workload patterns and pre-position energy storage for optimal response. These systems analyze historical training data, model complexity, and computational schedules to anticipate power demand patterns and optimize battery charge states accordingly.
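The forecasting models themselves are not specified, so the sketch below plainly substitutes simple exponential smoothing, a far more basic method than machine learning, to show how a forecast would feed a charge target: hold back a fixed backup reserve, then add the predicted burst energy plus a safety margin. The burst history, reserve, margin, and smoothing factor are hypothetical.

```python
def ses_forecast(history, alpha=0.5):
    """Simple exponential smoothing: a basic stand-in for an ML forecaster."""
    level = history[0]
    for x in history[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def target_soc_mwh(burst_history_mwh, reserve_mwh, capacity_mwh, margin=1.2, alpha=0.5):
    """Charge target = backup reserve + forecast burst energy with a safety margin."""
    forecast = ses_forecast(burst_history_mwh, alpha)
    return min(capacity_mwh, reserve_mwh + margin * forecast)


# HYPOTHETICAL energy drawn by the last four training bursts (MWh).
history = [2.0, 2.4, 3.0, 2.6]
print(f"Forecast next burst: {ses_forecast(history):.2f} MWh")
print(f"Pre-charge target:   {target_soc_mwh(history, reserve_mwh=5.0, capacity_mwh=10.0):.2f} MWh")
```

Keeping the backup reserve as a separate term means the forecast can only add charge on top of it, never eat into it.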

GPU cluster power management requires sub-second response times to prevent computational disruptions that can destroy hours or days of training progress. Battery systems equipped with high-power inverters (4-6C discharge rates) can provide the instantaneous power delivery essential for maintaining AI model training continuity during grid disturbances or load transitions.
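A C-rate expresses discharge power relative to stored energy: 1C empties a full battery in one hour, 4C in 15 minutes. The sketch turns the 4-6C range into power figures; the 5 MWh capacity and the 60 MW target are hypothetical.

```python
def discharge_power_mw(capacity_mwh: float, c_rate: float) -> float:
    """Power available at a given C-rate (1C = full capacity in one hour)."""
    return capacity_mwh * c_rate


def runtime_minutes(c_rate: float) -> float:
    """Time to empty a full battery at a constant C-rate."""
    return 60.0 / c_rate


def capacity_needed_mwh(power_mw: float, c_rate: float) -> float:
    """Smallest capacity that can deliver power_mw at the given C-rate."""
    return power_mw / c_rate


for c in (4, 6):
    print(f"{c}C: 5 MWh -> {discharge_power_mw(5, c):.0f} MW "
          f"for {runtime_minutes(c):.0f} min")
print(f"60 MW at 4C needs {capacity_needed_mwh(60, 4):.0f} MWh")
```

High C-rates let a modest battery cover a large, brief swing, at the cost of how long it can sustain that output.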

Coordinated engine-battery operation enables "seamless bursting" where AI training clusters can exceed their steady-state power allocation for short periods. Batteries provide the additional power during computational peaks while engines handle baseline consumption, maximizing GPU utilization without oversizing generation capacity.
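"Seamless bursting" can be sketched as a dispatch rule: engines run at a flat baseline, the battery covers load above it, and spare engine output recharges the battery when load falls below the baseline. The 40 MW engine baseline, 15-minute steps, and 10 MWh / 20 MW battery starting at 6 MWh are hypothetical; the sketch assumes a lossless battery and that surplus engine output the battery cannot absorb is simply throttled.

```python
def burst_dispatch(load_mw, engine_mw, energy_mwh, power_mw, soc_mwh, dt_h):
    """Flat engine baseline plus battery for bursts above it.

    Returns (battery_mw, unserved_mw, final_soc_mwh); battery_mw > 0 = discharge.
    """
    battery_mw, unserved_mw = [], []
    for load in load_mw:
        gap = load - engine_mw  # > 0: shortfall, < 0: spare engine output
        if gap > 0:
            batt = min(gap, power_mw, soc_mwh / dt_h)
        else:
            batt = max(gap, -power_mw, -(energy_mwh - soc_mwh) / dt_h)
        soc_mwh -= batt * dt_h
        battery_mw.append(batt)
        unserved_mw.append(max(0.0, gap - batt))  # burst the battery could not cover
    return battery_mw, unserved_mw, soc_mwh


# HYPOTHETICAL: 35 MW baseline load with a 55 MW burst lasting three 15-minute steps.
load = [35, 55, 55, 55, 35, 35]
batt, unserved, soc = burst_dispatch(load, engine_mw=40, energy_mwh=10,
                                     power_mw=20, soc_mwh=6, dt_h=0.25)
print("battery MW: ", batt)
print("unserved MW:", unserved)
print(f"final SOC: {soc:.2f} MWh")
```

In this run the burst outlasts the stored energy: the third burst step is 1 MW short and the fourth is 15 MW short. At that point a controller would presumably have to throttle the cluster or bring more generation online, which is the sizing trade-off behind avoiding oversized generation.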

Economic Analysis: Battery Storage ROI in AI Data Centers

Multiple revenue streams justify large-scale deployments

Data centers equipped with substantial battery capacity can generate revenue through six distinct channels: demand response payments, frequency regulation services, energy arbitrage, capacity market participation, backup power value, and improved AI training efficiency. Combined, these revenue streams can offset 40-60% of battery system costs over 10-year operational periods18. In markets with strong incentives, this can lead to a payback period of less than five years.
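Spreading a 40-60% offset evenly over 10 years means the six streams return roughly 4-6% of system cost per year, so a sub-five-year payback depends on how much upfront cost incentives remove. The sketch computes undiscounted simple payback and the incentive share needed to hit a target; the $10M system cost, $0.6M/yr revenue (6% of cost), and 30% incentive are hypothetical.

```python
def simple_payback_years(capex: float, incentive_fraction: float,
                         annual_net_revenue: float) -> float:
    """Undiscounted years to recover the capex left after upfront incentives."""
    return capex * (1.0 - incentive_fraction) / annual_net_revenue


def incentive_needed(capex: float, annual_net_revenue: float,
                     target_years: float) -> float:
    """Upfront incentive share (of capex) needed to reach target_years payback."""
    return max(0.0, 1.0 - target_years * annual_net_revenue / capex)


capex, revenue = 10.0, 0.6  # $M and $M/yr, HYPOTHETICAL
print(f"Payback with a 30% incentive: {simple_payback_years(capex, 0.3, revenue):.1f} years")
print(f"Incentive needed for 5 years: {incentive_needed(capex, revenue, 5):.0%}")
```

Higher-value markets raise `annual_net_revenue` and shorten the payback in proportion.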


Subscribe to Energy Industry Insights from Avanza Energy to keep reading this post and get 7 days of free access to the full post archives.